CUDA基础 [2]:Get Started

CUDA(Compute Unified Device Architecture)计算统一设备框架

GPU架构特点

-

核的角度:首先CPU由专为顺序串行处理而优化的几个核心组成。而GPU则由数以千计的更小、更高效的核心组成,这些核心专门为同时处理多任务而设计,可高效地处理并行任务。也就是,CPU虽然每个核心自身能力极强,处理任务上非常强悍,无奈他核心少,在并行计算上表现不佳;反观GPU,虽然他的每个核心的计算能力不算强,但他胜在核心非常多,可以同时处理多个计算任务,在并行计算的支持上做得很好。GPU和CPU的不同硬件特点决定了他们的应用场景,CPU是计算机的运算和控制的核心,GPU主要用作图形图像处理。图像在计算机呈现的形式就是矩阵,我们对图像的处理其实就是操作各种矩阵进行计算,而很多矩阵的运算其实可以做并行化,这使得图像处理可以做得很快,因此GPU在图形图像领域也有了大展拳脚的机会。

-

数据处理角度:CPU需要很强的通用性来处理各种不同的数据类型,比如整型、浮点数等,同时它又必须擅长处理逻辑判断所导致的大量分支跳转和中断处理,所以CPU其实就是一个能力很强的伙计,他能把很多事处理得妥妥当当,当然啦我们需要给他很多资源供他使用(各种硬件),这也导致了CPU不可能有太多核心(核心总数不超过16)。而GPU面对的则是类型高度统一的、相互无依赖的大规模数据和不需要被打断的纯净的计算环境,GPU有非常多核心(费米架构就有512核),虽然其核心的能力远没有CPU的核心强,但是胜在多,在处理简单计算任务时呈现出“人多力量大”的优势,这就是并行计算的魅力。

异构计算:不同体系结构的处理器相互协作完成计算任务,并行部分在GPU上运行,串行部分在CPU运行。CPU负责总体的程序流程,而GPU负责具体的计算任务,当GPU各个线程完成计算任务后,我们就将GPU那边计算得到的结果拷贝到CPU端,完成一次计算任务。

CUDA线程模型

线程模型(软件角度)

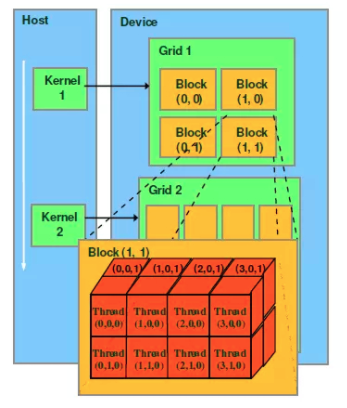

kernel在device上执行时实际上是启动很多线程,一个kernel所启动的所有线程称为一个网格(grid),同一个网格上的线程共享相同的全局内存空间,grid是线程结构的第一层次,而grid又可以分为很多线程块(block),一个线程块里面包含很多线程,这是第二层次。

-

Thread:线程,并行的基本单位;

-

Thread Block:线程块,互相合作的线程组,线程块有如下几个特点:- 允许彼此同步;

- 可以通过共享内存快速交换数据;

- 可以以1维、2维或3维组织(一般为3维);

- 每一个

block和每个thread都有自己的ID(blockIdx和threadIdx),我们通过相应的索引找到相应的线程块和线程。

-

Grid:一组线程块- 可以以1维、2维或3维组织(一般为2维);

- 共享全局内存;

grid和block都是定义为dim3类型的变量,dim3可以看成是包含三个无符号整数(x, y, z)成员的结构体变量,在定义时,缺省值初始化为1;dim3仅为host端可见,其对应的device端类型为uint3。

-

Warp:GPU执行程序时调度和运行的单位(是SM的基本执行单元),目前cuda的warp的大小为32;同在一个warp的线程,以不同数据资源执行相同的指令,这就是所谓SIMT(Single Instruction Multiple Threads,单指令多线程)。

-

Kernel:在GPU上执行的核心程序,这个kernel函数是运行在某个Grid上的。One kernel <==> One Grid

流处理器(硬件角度)

-

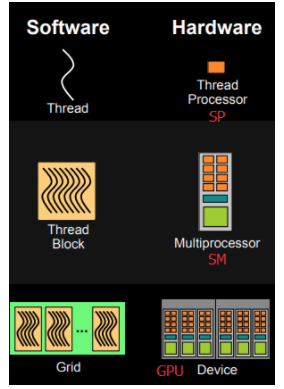

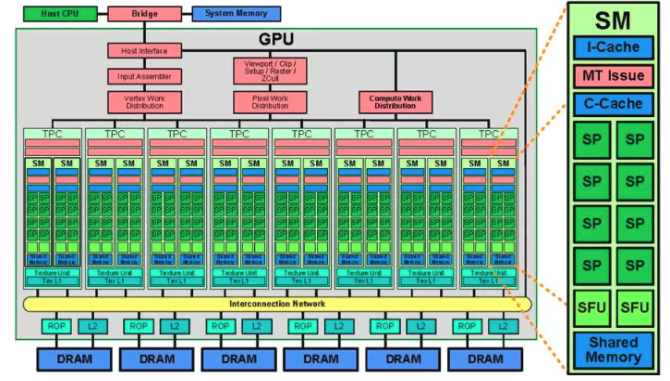

SP(streaming processor):也称CUDA core。最基本的处理单元,具体的指令和任务都是在SP上处理的。一个SP可以执行一个thread;

-

SM(streaming multiprocessor):也叫GPU大核,由多个SP加上其他资源(如:warp scheduler,register,shared memory等)组成。block在SM上执行。- SM可以看做GPU的心脏(对比CPU核心),

register和shared memory是SM的稀缺资源。CUDA将这些资源分配给所有驻留在SM中的threads。因此,这些有限的资源就使每个SM中active warps有非常严格的限制,也就限制了并行能力; - 每个SM包含的SP数量依据GPU架构而不同,Fermi架构GF100是32个,GF10X是48个,Kepler架构都是192个,Maxwell架构都是128个;

- SM上并不是所有的thread能够在同一时刻执行。Nvidia把32个threads组成一个warp,warp是调度和运行的基本单元。warp中所有threads并行的执行相同的指令。一个warp需要占用一个SM运行,多个warps需要轮流进入SM。由SM的硬件warp scheduler负责调度。目前每个warp包含32个threads(Nvidia保留修改数量的权利)。所以,一个GPU上resident thread最多只有

SM * warp个。 - block一旦被分配好SM,该block就会一直驻留在该SM中,直到执行结束。一个SM可以同时拥有多个blocks,但需要序列执行。

- SM可以看做GPU的心脏(对比CPU核心),

对应关系&硬件结构

内存模型

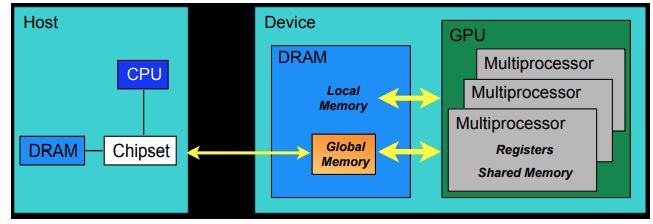

CUDA中的内存模型分为以下几个层次:

-

- 每个线程都有自己的registers(寄存器);

-

- 每个线程都有自己的local memory(局部内存);

-

- 每个线程块内都有自己的shared memory(共享内存),线程块内的所有线程共享这段内存资源

-

- 每个grid都有自己的global memory(全局内存),不同线程块的线程都可使用

-

- 每个grid都有自己的constant memory(常量内存)和texture memory(纹理内存),不同线程块的线程都可使用。

线程访问这几类存储器的速度是register > local memory >shared memory > global memory

编程模型

我们怎么写一个能在GPU跑的程序或函数呢?

| Executed on the: | Only callable from the: | |

|---|---|---|

__device__ float DeviceFunc() |

device | device |

__global__ void KernelFunc() |

device | host |

__host__ float HostFunc() |

host | host |

如上表所示,我们用__global__定义一个kernel函数,就是CPU上调用,GPU上执行,注意__global__函数的返回值必须设置为void。

CPU和GPU间的数据传输怎么写?

-

cudaMalloc():在设备端分配global memory

-

cudaFree():释放存储空间

-

-

cudaMemcpy(void *dst, void *src, size_t nbytes,enum cudaMemcpyKind direction):数据传输enum cudaMemcpyKind:传输方向

- cudaMemcpyHostToDevice(CPU到GPU)

- cudaMemcpyDeviceToHost(GPU到CPU)

- cudaMemcpyDeviceToDevice(GPU到GPU)

-

怎么用代码表示线程组织模型?

用dim3类来表示网格和线程块的组织方式,网格grid可以表示为一维和二维格式,线程块block可以表示为一维、二维和三维的数据格式。

dim3 DimGrid(100, 50); //5000个线程块,维度是100*50 |

线程索引方式速查

|

![现代硬件算法[8.8]: 时空局部性](/images/pd_09.webp)

![现代硬件算法[8.7]: 缓存遗忘算法](/images/pd_08.webp)

![现代硬件算法[8.6]: 淘汰策略](/images/pd_07.webp)

![现代硬件算法[8.5]: 列表排名](/images/pd_06.webp)

![现代硬件算法[8.4]: 外部排序](/images/pd_05.webp)